Be more emotional. But not all the time. Use your intelligence to decide when. ;)...

Wednesday, April 24, 2024

What's the value of reviewing a Draft PR?

Unless specifically requested, whose time is it the best use ...

Saturday, April 20, 2024

trivial feedback and micro-optimizations in code reviews

When these are given, is it because there is nothing more important to say? Or, does such feedback come from people who don't understand the bigger picture or want a quick way to show they've looked at the code without having to take the time and mental effort to truly understand ...

Tuesday, April 16, 2024

Before commenting

Are you just repeating what's already been said before? Where/when appropriate, are you correctly attributing others? Does your comment add value? Are you adding a new/different/unique perspective? Have you read the other comments first? Have you thought about who (possibly many people) will read the comme...

Monday, April 15, 2024

MAUI App Accelerator - milestone of note

In the last few days, MAUI App Accelerator passed ten thousand "official" unique installs. This doesn't include the almost eight thousand installs included via the MAUI Essentials extension pack. (Installs via an extension pack are installed in a different way, which means they aren't included in the...

Saturday, April 13, 2024

"I'm not smart enough to use this"

I quite often use the phrase "I'm not smart enough to use this" when working with software tools. This is actually code for one or more of the following: This doesn't work the way I expect/want/need. I'm not sure how to do/use this correctly. I'm disappointed that this didn't stop me from doing the wrong thing. I don't understand the error message (if any) that was displayed. Or the error...

Friday, April 12, 2024

Don't fix that bug...yet!

A bug is found. A simple solution is identified and quickly implemented. Sounds good. What's not to like? There are more questions to ask before committing the fix to the code base. Maybe even before making the fix. How did the code that required this fix get committed previously? Is it a failure...

Thursday, April 11, 2024

Formatting test code

Should all the rules for formatting and structuring code used in automated tests always be the same as those used in the production code? Of course, the answer is "it depends!" I prefer my test methods to be as complete as possible. I don't want too many details hidden in "helper" methods, as this means the details of what's being tested get spread out. As a broad generalization, I may have two helpers...

Tuesday, April 09, 2024

Varying "tech talks" based on time of day

I'll be speaking at DDD South West later this month. I'm one of the last sessions of the day. It's a slot I've not had before. In general, if talking as part of a larger event, I try to include something to link back to earlier talks in the day. Unless I'm first, obviously. At the end of a day of talks, where most attendees will have already heard five other talks that day, I'm wondering about including...

Monday, April 08, 2024

Comments in code written by AI

The general "best-practice" guidance for code comments is that they should explain "Why the code is there, rather than what it does." When code is generated by AI/LLMs (Copilot and the like) via a prompt (rather than line completions), it can be beneficial to include the command (prompt) provided to the "AI". This is useful as the generated code isn't always as thoroughly reviewed as code written by...

Sunday, April 07, 2024

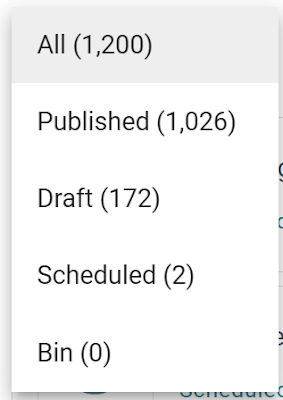

Reflecting on 1000+ blog posts

These are the current numbers for my blog. (Well, at the time I first started drafting this. I expect it will be several days before it's posted and then they will be different.) These are the numbers I care about. The one most other people care about is 2,375,403. That's the number of views the articles...

Friday, April 05, 2024

Does code quality matter for AI generated code?

A large part of code quality and the use of conventions and standards to ensure its readability has long been considered important for the maintainability of code. But does it matter if "AI" is creating the code and can provide a more easily understandable description of it if we really need to read and understand it? If we get good enough at defining/describing what the code should do, let "AI" create...

Thursday, April 04, 2024

Where does performance matter?

Performance matters. Sometimes. In some cases. It's really easy to get distracted by focusing on code performance. It's easy to spend far too much time debating how to write code that executes a few milliseconds faster. How do you determine/decide/measure whether it's worth discussing/debating/changing some code if the time spent thinking about, discussing, and then changing that code takes much more time...

Wednesday, April 03, 2024

Thought vs experiment

Scenario 1: Consider the options and assess each one's impact on usage before shipping the best idea. Scenario 2: Ship something (or multiple things) and see what the response is. Then iterate and repeat. Both scenarios can lead to the same result (in about the same amount of time). Neither is necessarily better than the other. However, it doesn't often feel that way. Always using one isn't...

Monday, April 01, 2024

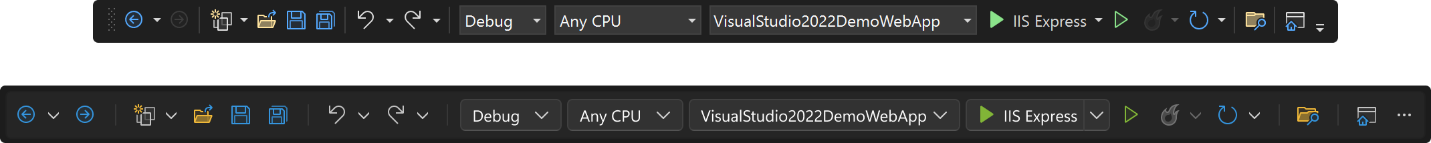

The Visual Studio UI Refresh has made me more productive

Visual Studio is having a UI refresh. In part, this is to make it more accessible. I think this is a very good thing. If you want to give feedback on another possible accessibility improvement, add your support, comments, and thoughts here. Anyway, back to the current changes. They include increasing...

when the developers no longer seem intelligent

There are companies where the managers don't know what developers do. The developers have some special knowledge to make the computers do what the business needs. Sometimes, these managers ask the developers a question, and they give a technical answer the manager doesn't understand. Very soon (maybe already), the developers will be using AI to do some of the technical work the company wants/needs. Eventually,...