Wednesday, March 29, 2023

You say "it's too complicated to test" but...

Monday, March 27, 2023

Dear developer, how will you know when you're finished?

That thing you're working on:

- How are you measuring progress?

- Are you making progress?

- Do others depend on what you're doing and need to know what you'll produce and when it will be finished?

- How will others test it when you've finished?

- How do you know you are going in the right direction?

- How will you know when it's done?

You should probably work this out before you start!

A few years ago, I was invited to give a talk at a university. I was asked to talk about how to think about building software. That was very vague, but I interpreted it as a reason to talk about the things I think about before starting to code.

Among the things I mentioned was the Definition of Done. (The best thing Scrum has given us.)

The students had never thought about this before.

The lecturers/tutors/staff had never thought of this before.

If you're not familiar, the idea is to specify/document how you'll know that you're finished. What are the things it needs to do? When they're all done, you've finished.

They added it as something they teach as part of the curriculum!

Without knowing when you're done, it's hard to know when to stop.

Without knowing when you're done, you risk stopping too soon or not stopping soon enough.
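As a rough illustration of the idea above, a Definition of Done can be treated as a checklist that answers both "am I making progress?" and "am I finished?". This is only a minimal sketch: the `Criterion` class, the helper functions, and the example criteria for an imaginary "export to CSV" feature are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    """One thing the work must do before it counts as finished."""
    description: str
    met: bool = False


def progress(criteria):
    """Return (number met, total) so progress can be reported at any time."""
    return sum(1 for c in criteria if c.met), len(criteria)


def is_done(criteria):
    """Done means there is at least one criterion and every one is met."""
    return bool(criteria) and all(c.met for c in criteria)


# Hypothetical criteria for an imaginary "export to CSV" feature.
definition_of_done = [
    Criterion("Exports all visible rows", met=True),
    Criterion("Handles commas and quotes in values", met=True),
    Criterion("Automated tests cover the export", met=False),
]

met, total = progress(definition_of_done)
print(f"{met} of {total} criteria met; done: {is_done(definition_of_done)}")
```

An empty list deliberately counts as "not done": if nothing has been written down, there is no way to know when to stop.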

I like causing tests to fail

I like it when tests start failing.

Not in a malicious, wanting-to-break-things way.

I like it when I make a change to some code and then tests fail.

This could seem counterintuitive, and it surprised me when I noticed it.

If an automated test starts failing, that's a good thing. It's doing what it's meant to.

I'd found something that didn't work.

I wrote a test.

I changed the code to make the test pass.

Ran all the tests.

Saw that some previously passing tests now failed.

I want to know this as quickly as possible.

If I know something is broken, I can fix it.

The sooner I find this out, the better, as it saves time and money to find and fix the issue.

Compare the time, money, and effort involved in fixing something while working on it and fixing something once it's in production.

Obviously, I don't want to be breaking things all the time, but when I do, I want to know about it ASAP!

Sunday, March 26, 2023

Aspects of "good code" (not just XAML)

I previously asked what good code looks like.

Here's an incomplete list.

It should be:

- Easy to read

- Easy to write

- Clear/unambiguous

- Succinct (that is, not unnecessarily verbose)

- Consistent

- Easy to understand

- Easy to spot mistakes in

- Easy to maintain/modify

- Supported by good tooling

In thinking about XAML, let's put a pin in that last point (as we know, XAML tooling is far from brilliant) and focus on the first eight points.

Does this sound like the XAML files you work with?

Would you like it to be?

Have you ever thought about XAML files in this way?

If you haven't ever thought of XAML files in terms of what "good code looks like" (whether that's the above list or your own), is that because "all XAML looks the same, and it isn't great"?

Saturday, March 25, 2023

Is manual testing going to become more important when code is written by AI?

It's part concern over the future of my industry, part curiosity, and part interest in better software testing.

[Image caption: AI generated this image based on the prompt "software testing"]

As the image above shows, it's probably not the time to start panicking about AI taking over and making everyone redundant, but it is something to be thinking about.

If (and it might be a big "if") AI is going to be good at customer support and writing code, it seems there's still a big gap for humans in the software support, maintenance, and improvement process.

Since most software development involves modifying existing code rather than writing it from scratch, I doubt you'll dispute that adding features and fixing bugs is a major part of what a software developer does.

This still leaves a very important aspect that requires human knowledge of the problem space and code.

Won't we, at least for a long time, still need people to:

- Understand how the reported issue translates into a modification to code?

- Convert business requirements to something that can be turned into code?

- Verify that the code proposed (or directly modified) by AI is correct and does what it should?

Having AI change code because of a possible exception is quite straightforward. Explaining a logic change in relation to existing code could be very hard without an understanding of the code.

And, of course, we don't want AI modifying code and also verifying that it has done so correctly because it can't do this reliably.

There's also a lot of code that isn't covered by automated tests and/or must be tested by humans/hand.

Testing code seems like a job that won't be threatened by AI any more than other software development-related jobs. AI is another tool that testers can use. Sadly, I know a lot of testers rely heavily on manual testing, and so will be less inclined to use this tool when they aren't using any of the others already available to them.

Wednesday, March 22, 2023

5 reasons WE SHOULD think about how XAML could be better

- Working with XAML can be hard.

- Working with XAML can be slow.

- There is "limited" tooling for working with XAML files.

- There is a lot of existing code that uses XAML, which needs to be supported, maintained, and updated in the future.

- It is still the most mature option for building native User Interfaces for desktop and mobile apps with .NET.

5 reasons NOT to think about how XAML could be better

- Because no one else is thinking (or talking or writing) about it.

- Because that's just the way it is.

- Because if it could be better, Microsoft would have already done it.

- Because we could use another language instead.

- Because we're too busy doing the work to think about how the work could be better.

- Bonus: Because if we realize there's a better way, we might have to admit what we've been doing all this time isn't the best.

I didn't say they were good reasons....

Thursday, March 16, 2023

Microsoft's Ability Summit has inspired me, again!

Microsoft's Ability Summit was last week, and it has, once again, inspired me to do more work on the tools I have in progress.

You can register via the above link to get to the official site, but the videos are also now available on YouTube.

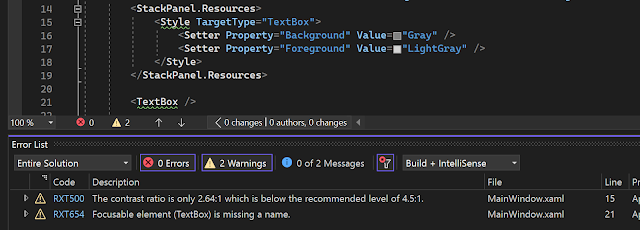

It was inspiring to see the recently announced Accessibility Checker inside Visual Studio in action. It also showed me that it does have value. I had doubts about this as it's functionality that already exists elsewhere.

This new tool allows you to run the same tests as can be run by Accessibility Insights, but from a button in the in-app (debug) toolbar (or the Live Visual Tree window).

I've got into the habit of using Accessibility Insights directly or via automated testing* to perform basic testing of an application.

* Yes, I have tests that run an app, navigate to each page, run accessibility tests against that page, and check for (& report) failures. It's not perfect, but it's much better, faster, and more reliable than doing it manually.
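The shape of tests like the ones in that footnote can be sketched as a simple loop. Everything here is an assumption for illustration: `check_all_pages`, the `navigate` and `scan` callables, and the fake page data are hypothetical stand-ins, not the API of Accessibility Insights or any real UI automation library.

```python
from typing import Callable, Dict, Iterable, List


def check_all_pages(
    pages: Iterable[str],
    navigate: Callable[[str], None],
    scan: Callable[[], List[str]],
) -> Dict[str, List[str]]:
    """Visit each page, scan it, and collect any failures by page name."""
    failures: Dict[str, List[str]] = {}
    for page in pages:
        navigate(page)   # hypothetical: drives the running app to the page
        issues = scan()  # hypothetical: runs the accessibility rules
        if issues:
            failures[page] = issues
    return failures


# Stand-ins for a real app driver and scanner, so the sketch runs on its own.
FAKE_RESULTS = {"Home": [], "Settings": ["Button has no accessible name"]}
current_page = {"name": ""}


def fake_navigate(page: str) -> None:
    current_page["name"] = page


def fake_scan() -> List[str]:
    return FAKE_RESULTS[current_page["name"]]


report = check_all_pages(FAKE_RESULTS, fake_navigate, fake_scan)
for page, issues in report.items():
    print(f"{page}: {', '.join(issues)}")
```

Passing the navigation and scanning steps in as functions keeps the loop independent of whichever automation tool actually drives the app.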

It reminded me that I have code that can do much of this on source code.

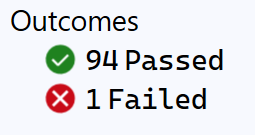

Like this:

The benefit of Visual Studio having an integrated Accessibility Checker in the debug experience is that it makes issues easier for developers to see and fix because they don't have to go to another tool. It makes them quicker and easier to fix by "shifting left" to be part of the debugging experience. So how much better would it be to shift further left, to when the code is being written (before getting to the debugging step), and, rather than putting the functionality behind another tool that must be known about and run, to put the information where developers are already looking?

I really must hurry up and get this into a state where I can make it public.

It's not as complete as the other tooling as it can't catch quite as many issues, but I think there's value in catching (and fixing) even some issues earlier.
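To give a feel for what checking source code (rather than a running app) can mean, here is a minimal sketch of a single rule: flag `Image` elements in a XAML file that have no `AutomationProperties.Name`. It is not the tool described above and it is far from a complete accessibility check. The sample XAML, the `find_unnamed_images` function, and the choice of rule are all assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical XAML fragment: one image without an accessible name, one with.
XAML = """
<StackPanel xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation">
    <Image Source="logo.png" />
    <Image Source="photo.png" AutomationProperties.Name="Team photo" />
    <Button Content="Save" />
</StackPanel>
"""


def find_unnamed_images(xaml_text):
    """Return the Source of every Image that lacks AutomationProperties.Name."""
    root = ET.fromstring(xaml_text.strip())
    issues = []
    for element in root.iter():
        # Tags look like "{namespace}Image"; keep only the local name.
        local_name = element.tag.split("}")[-1]
        if local_name == "Image" and not element.get("AutomationProperties.Name"):
            issues.append(element.get("Source", "(no Source)"))
    return issues


for source in find_unnamed_images(XAML):
    print(f"Image '{source}' has no AutomationProperties.Name")
```

Because this only reads the markup, it can run while the file is being edited, with no need to build or launch the app. The trade-off is the one noted above: names set in code-behind, styles, or bindings are invisible to a check like this.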

"It's as pretty as I'm able to make it" - An excuse that shows an opportunity

When a developer gives a demo that shows code with a UI, they invariably make no effort to make it look in any way visually appealing or show any indication they've done anything more than put controls on the screen.

They'll also excuse their lack of effort on the basis that they don't have the skills, knowledge, or experience to do any better, maybe with a subtle joke that designers are somehow lesser. :(

With a little effort, presenters could significantly distinguish themselves from "all" the others who don't put in any effort here.

It would also be a great opportunity for showing something that easily makes a basic UI look better than many do by default.

My cogs are whirring.....

Monday, March 13, 2023

Please help improve the extensibility experience in Visual Studio

You can then click on the "Install" text link, and it will install the things you are missing.

But what if you want to require (or recommend) that an extension is installed to work with the solution?

Well, then you're stuck.

You're forced to include this in the documentation and hope that someone looks at it. Ideally when setting up their environment, or--more likely--when things aren't working as expected and they want to know why.

Obviously, this is far from ideal and can be a barrier (I think) to the creation of specific extensions to fill the gaps in individual projects. I have had multiple discussions where custom extensions were ruled out because it is too difficult to get everyone working on the code base to install the extensions. We ended up with sub-standard solutions requiring documentation and custom scripts. :(

Wouldn't it be great if the existing infrastructure for detecting missing components could be extended to detect missing extensions too?

Well, it's currently under consideration. Maybe you've got a moment to help highlight the need for this by giving the suggestion an upvote. 👍

Thank you, please. :)

Tuesday, March 07, 2023

Do you know what good code looks like?

It's a serious question!

If you don't know what good code looks like, how do you know what you're doing is good?

What if it's not good?

What constitutes good?

These are important questions when creating reliable, maintainable, high-quality code.

This applies to any code or programming language, but especially to XAML.

The above quote was from a member of the audience after one of the first times I gave a talk about rethinking XAML. I now use it as an audience prompt in current talks.

I've met very few people who work with XAML who have given serious thought to what good XAML looks like.

Most XAML files look the same.

And they don't look great.

They're not easy to read.

They're not easy to understand.

I don't think the solution is abandoning XAML. (Especially as there's so much existing code that needs to be supported, maintained, improved, and enhanced.)

I think the solution is to change the way we write XAML.

More on this to follow...

In the meantime, what do you think "good XAML" looks like?