I've been thinking about software testing recently and have found real-world examples of how it's used to be lacking. So here's an example with real numbers to show the difference that having tests makes.

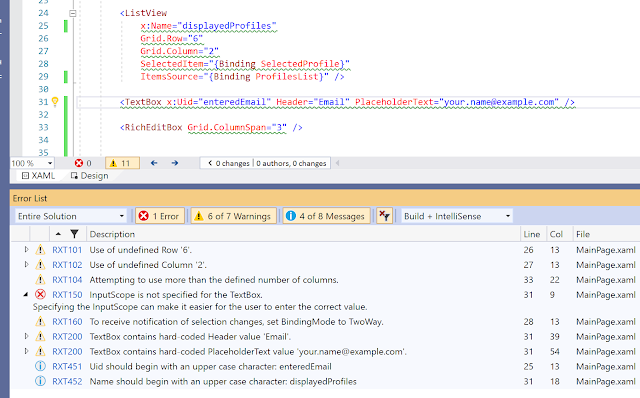

I have a project (Rapid XAML Toolkit) that does some complicated things. I've often said that I couldn't have built the functionality that's there without having tests for it. To back that up, I want to share some stats and show how having tests has helped over time.

The functionality in question uses Roslyn Code Analysis to look at C# and VB.Net code and generates XAML based on it.

The current functionality only generates XAML based on properties and ignores methods. To meet a new requirement, the tool now needs to generate XAML for some methods too. Testing to the rescue.

Background

The current functionality might seem quite simple: look at the code file, find the properties, and generate some XAML. At that level, it is, but there are some configurable options for what is generated that add complexity, and there are probably more ways to write properties in code than you might at first consider.

It's not the most complicated thing in the world, but it's definitely more than I can keep entirely in my head, and it's not a set of scenarios I'd want to manage or repeatedly test by hand.

I've known business logic much more complicated.

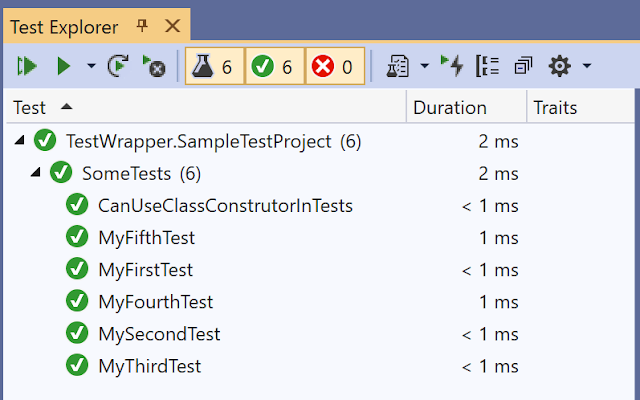

Before making this new change, there were 744 tests on the code.

I created these tests when writing the original functionality. The value of these tests is that they document what the correct behavior of the code should be. Most were created before the feature was fully implemented, so they gave me a way of knowing when the code did everything it should: when all the tests passed.

How these tests helped in the past

These tests contain many examples of input for different scenarios and the output they are supposed to produce when everything works correctly.
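To make the idea of "input plus expected output" concrete, here's a minimal sketch of what such a test table can look like. It is not the toolkit's actual code: it's written in Python rather than C#, and `generate_xaml`, the regular expression, and the XAML it emits are all hypothetical stand-ins chosen to keep the example small.

```python
import re

# Hypothetical stand-in for the real generator (which uses Roslyn, not a regex).
# It only recognises simple C# auto-properties.
PROPERTY_PATTERN = re.compile(r"public\s+(\w+)\s+(\w+)\s*\{\s*get;\s*set;\s*\}")


def generate_xaml(source: str) -> str:
    """Return one illustrative XAML element per property found in the source."""
    lines = []
    for type_name, prop_name in PROPERTY_PATTERN.findall(source):
        if type_name == "bool":
            lines.append(f'<CheckBox IsChecked="{{Binding {prop_name}}}" />')
        else:
            lines.append(f'<TextBlock Text="{{Binding {prop_name}}}" />')
    return "\n".join(lines)


# Each case pairs an input scenario with the output it is supposed to produce.
CASES = [
    ("public string Name { get; set; }", '<TextBlock Text="{Binding Name}" />'),
    ("public bool IsActive { get; set; }", '<CheckBox IsChecked="{Binding IsActive}" />'),
    ("public void Save() { }", ""),  # methods are ignored in this sketch
]


def run_cases():
    """Return (source, expected, actual) for every case that does not match."""
    failures = []
    for source, expected in CASES:
        actual = generate_xaml(source)
        if actual != expected:
            failures.append((source, expected, actual))
    return failures
```

An empty list from `run_cases()` means every documented scenario still behaves as expected. Adding a scenario is one more line in `CASES`.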

If I didn't have executable [unit] tests, I could have created documents that explained how to test everything manually, but that documentation would have come with its own management overhead. Running those manual tests would also have been time-consuming and error-prone because, well, I'm human.

In addition to the tests mentioned above, I also have a separate test process that crawls all the code files on my machine and ensures they can be processed without any unexpected scenarios being encountered that could cause the code to fail.
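A crawl like this can be sketched in a few lines. Again, this is an illustration under assumptions rather than my actual process: it's in Python, `process_file` is a hypothetical placeholder for the real analysis (here it just checks that braces balance), and the file extensions are simply the obvious ones for C# and VB.Net.

```python
from pathlib import Path


def process_file(text: str) -> None:
    """Hypothetical stand-in for the real analysis; raises on input it can't handle."""
    if text.count("{") != text.count("}"):
        raise ValueError("unbalanced braces")


def crawl(root, extensions=(".cs", ".vb")):
    """Process every matching file under root and collect any that raise."""
    failures = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix.lower() not in extensions:
            continue
        try:
            process_file(path.read_text(encoding="utf-8", errors="replace"))
        except Exception as exc:
            # Record the file so it can become a new test case later.
            failures.append((str(path), repr(exc)))
    return failures
```

Calling `crawl` on a folder of source code returns a list of files that caused an exception. Each entry is a candidate for a new test case.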

This has found a couple of instances where code was structured in a way I'd never encountered before. These examples became test cases, and then I changed the code to handle them. I could do this knowing that if my changes for the new scenario altered any existing functionality, the existing tests would start failing.

There have been a couple of times where other people have found scenarios that I'd not considered and that were not covered by the code. You might know these as bugs. How did I fix them quickly? Well, by adding new tests to document the correct behavior for the new input scenario, then changing the code to make the latest (and all existing) tests pass.

Adding new functionality

Getting back to the new functionality I need to add, the requirements are:

- Add the ability to generate XAML for some methods in C# code.

- Add the ability to generate XAML for some methods in VB.Net code.

- Don't break any of the existing functionality.

Having the existing test suite makes the third point really easy and quick to verify. If any existing tests start failing, I know I've broken something. They only take a few seconds to run, and I don't have to worry about making any mistakes when running the tests.

The first thing I encountered was that there were four existing tests related to the new functionality. These dictated what should happen when the tool encountered a method that will now be treated differently. As this functionality is changing, these tests no longer served any purpose and so were deleted. I'm always very cautious about deleting tests, but as these would be replaced with new tests that defined the new desired functionality, that's ok.

I was able to define all the requirements for the C# version of the new functionality by creating tests for each of the new requirements.

This meant 14 more tests were added.

I then changed the code to add the required functionality.

Once all the new tests were added and passing (which took a few days' worth of work), I knew all the new functionality worked correctly.

Adding the VB version was even easier.

I took a copy of the new C# tests and converted them to VB.

With a complete set of VB tests and a reference implementation in C#, implementing the VB functionality, verifying it was correct, and confirming that all other functionality still worked in the same way (i.e. I hadn't accidentally broken anything) took less time than writing this blog post!
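The reason converted tests are so useful is that the expected output doesn't change, only the input language does. Here's a rough sketch of that pairing. As before, it's Python rather than the toolkit's own code, and the generator functions, the regular expressions, and the `<Button>` output are hypothetical choices made purely for illustration.

```python
import re

# Hypothetical, regex-based stand-ins for the two language-specific analyzers.
CSHARP_METHOD = re.compile(r"public\s+void\s+(\w+)\s*\(\s*\)")
VB_METHOD = re.compile(r"Public\s+Sub\s+(\w+)\s*\(\s*\)")


def _render(method_names):
    """Emit one illustrative XAML element per method name."""
    return "\n".join(f'<Button Content="{name}" />' for name in method_names)


def generate_from_csharp(source: str) -> str:
    return _render(CSHARP_METHOD.findall(source))


def generate_from_vb(source: str) -> str:
    return _render(VB_METHOD.findall(source))


# The same scenario written in both languages, with one shared expected output.
PAIRED_CASES = [
    ("public void Save() { }", "Public Sub Save()\nEnd Sub", '<Button Content="Save" />'),
    ("public string Name { get; set; }", "Public Property Name As String", ""),
]


def failing_cases():
    """Return (language, source) for every input that misses the shared expectation."""
    failures = []
    for csharp_source, vb_source, expected in PAIRED_CASES:
        if generate_from_csharp(csharp_source) != expected:
            failures.append(("C#", csharp_source))
        if generate_from_vb(vb_source) != expected:
            failures.append(("VB", vb_source))
    return failures
```

With the expectations already pinned down by the first language, the second implementation is done when `failing_cases()` comes back empty.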

I now have 767 tests that document all the current, correct behavior.

Not only have these saved me development time while adding features, by removing the need to repeatedly verify by hand that nothing created previously has accidentally changed, but they also have value for the future.

The Future

The next time I need to add a feature, fix a bug, or ensure an edge case I hadn't accounted for before is handled correctly, I can make sure that I don't break (or change) any existing functionality in just a few seconds.

If I didn't have tests, any future change would require that I manually verify that all existing functionality still worked the same way. The reality of performing such tests myself is that the following would happen.

- It would take ages. (I estimate a couple of days, at least.)

- I'm not confident I wouldn't make mistakes and so not test everything accurately.

- I'd get bored, which would diminish my enthusiasm for the project, future changes, and responding to support issues.

- I'd be tempted to skip some tests. This could lead to changes in functionality accidentally getting into the software.

It's not that I'm too lazy to test manually; I just value my time and the quality of the software too much to not create automated tests.

![Which, if any, of these items would help with application development? [ ] Generation of XAML templates from data model with bindings to properties](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEisvtZqYUupGSRSB6ipfCVhovlaTxsLqY1kTLhE00SIC9xnk7bCWBAeC5KuQwDcdXgBcohvQfcWHV71Sa9dlfbwZmkzksRpsBfD0MNZ6WbgPSdt2h6s7dQnXaI1n32js0ehem5WOA/s640/msft-xaml-question10.png)